Con Espressione!

With the computer program Con Espressione! you can explore the difference between a cold, inexpressive, machine-like reproduction of a musical piece and a more “human” interpretation produced by an AI that was trained on hundreds of recorded performances. You can use the software at your own live event on music and AI to discuss the dimensions and quality of musical expressivity, both human and artificial.

With Con Espressione! you control the tempo and loudness of classical piano pieces with hand movements. In the background, a computer model of expressive performance (called “Basis Mixer”) adds subtle modifications to the performance. You set the contribution of the Basis Mixer with an “AI slider”: the higher the slider value, the more freedom the machine has to deviate from the parameters you prescribe. It adjusts each note’s loudness, expressive timing, and articulation so that they differ slightly from what you conduct, making the music livelier and less “mechanical”.
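The effect of the AI slider can be pictured as a weighted mix of two values: the one you conduct and the one a performance model would prefer. The sketch below is only an illustration of that idea and not the Basis Mixer's actual implementation. The function names `blend` and `note_velocity`, the use of linear interpolation, and the choice of MIDI velocity as the loudness unit are all assumptions made for this example.

```python
def blend(conducted: float, suggested: float, ai_weight: float) -> float:
    """Linearly interpolate between the conducted value and a model's suggestion.

    ai_weight = 0.0 keeps exactly what the user conducts;
    ai_weight = 1.0 follows the (hypothetical) model suggestion completely.
    """
    w = min(max(ai_weight, 0.0), 1.0)  # clamp the slider position to [0, 1]
    return (1.0 - w) * conducted + w * suggested


def note_velocity(conducted: float, suggested: float, ai_weight: float) -> int:
    """Blend two loudness values and clamp the result to the MIDI velocity range 1..127."""
    mixed = round(blend(conducted, suggested, ai_weight))
    return int(min(max(mixed, 1), 127))


if __name__ == "__main__":
    # Slider at 0: the note is played exactly as conducted.
    print(note_velocity(60, 80, 0.0))
    # Slider halfway: the note lands between the two values.
    print(note_velocity(60, 80, 0.5))
```

With the slider at zero the conducted value passes through unchanged, which matches the "mechanical" end of the comparison described above. The same blending could be applied to timing or articulation values.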

Technical requirements

Con Espressione! consists of three parts:

- The main application window to visualize the hand movements and the current performance parameters.

- The menu application for selecting the composition to play.

- A backend application that applies dynamics, tempo, and timing deviations to the written music of the chosen composition.

The software, instructions for installation and configuration, and background information are available via an online repository.

The main application should be presented to the audience using a projector or a large display. We recommend a small touch screen for the menu application, but it can also be operated using a regular screen and a computer mouse.

The software has been tested on Apple macOS computers and Linux PCs. At our events, it ran on an Intel NUC 8 i5 with 8 GB of RAM and a 120 GB SSD.

Hand tracking is achieved using the Leap Motion Controller. The slider that controls the impact of the Expressiveness AI is a custom-designed MIDI slider device, but any hardware or software MIDI device that sends MIDI Control Change messages should work, too.
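For readers unfamiliar with MIDI, a Control Change message consists of three bytes: a status byte whose upper four bits are `0xB` and whose lower four bits carry the channel, followed by a controller number and a value, each in the range 0 to 127. The sketch below shows how such a message could be decoded and turned into a slider position between 0 and 1. It is an illustration only: the function names are hypothetical, and the controller number and channel that Con Espressione! actually listens to are not stated here, so the values in the example are assumptions.

```python
from typing import Optional, Tuple

CONTROL_CHANGE = 0xB0  # upper nibble of the status byte for Control Change


def parse_control_change(message: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (channel, controller, value) for a 3-byte Control Change message, else None."""
    if len(message) != 3 or (message[0] & 0xF0) != CONTROL_CHANGE:
        return None
    status, controller, value = message
    if controller > 127 or value > 127:  # data bytes must have the top bit clear
        return None
    return status & 0x0F, controller, value


def slider_position(value: int) -> float:
    """Map a 7-bit controller value (0..127) to a slider position in [0.0, 1.0]."""
    return value / 127.0


if __name__ == "__main__":
    # Example message: channel 0, controller 1, maximum value (illustrative numbers).
    parsed = parse_control_change(bytes([0xB0, 1, 127]))
    if parsed is not None:
        channel, controller, value = parsed
        print(channel, controller, slider_position(value))
```

Any device that emits messages of this shape, whether a physical fader or a software controller, produces the same kind of input.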

In addition, speakers or headphones are needed for audio output.

The software was developed as part of the Con Espressione research project by Prof. Gerhard Widmer and his team from Johannes Kepler University Linz, Austria.

Your own event

The following ideas can be combined to create an attractive live event:

- Invite a pianist to play classical music on stage.

- Discuss expressivity while listening to different interpretations of the same piece and describing their character. You can also show the results of two experiments in perceived musical expression via an interactive visualization.

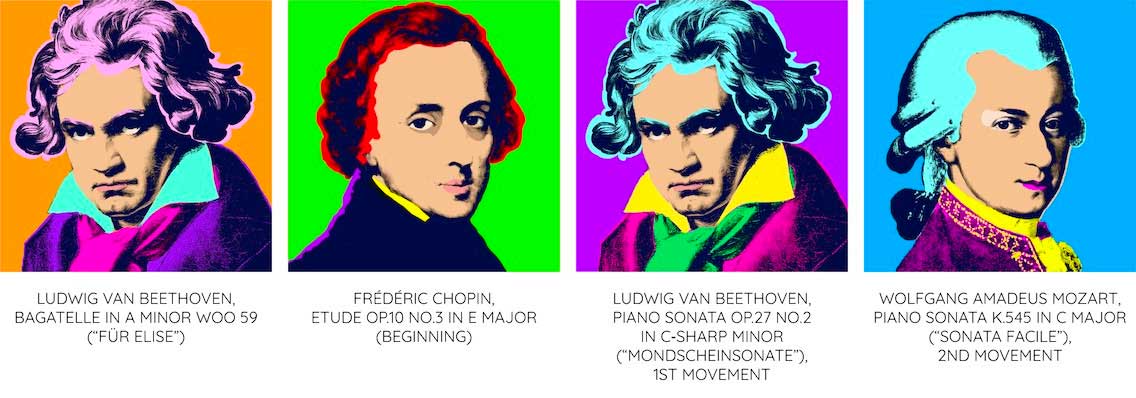

- Show Con Espressione! and let the audience experiment on their own. The following pieces are available:

- Ludwig van Beethoven, Bagatelle in A Minor WoO 59 (Für Elise).

- Frédéric Chopin, Etude Op.10 No.3 in E Major (Beginning).

- Ludwig van Beethoven, Piano Sonata Op.27 No.2 in C-Sharp Minor (Moonlight sonata), 1st movement.

- Wolfgang Amadeus Mozart, Piano Sonata K.545 in C Major (Sonata Facile), 2nd movement.

- Discuss musical expressivity with musicians, other invited guests, and the audience.

MUSKI event in Heidelberg

Together with our friends from the MAINS (Mathematik-Informatik-Station), we organized the event “Con Espressione! Über Künstliche Intelligenz und lebendige Musik” (“On Artificial Intelligence and Living Music”) on October 23, 2022 at 11:00 am in Heidelberg. Gerhard Widmer, Carlos Cancino Chacón, and colleagues from Johannes Kepler University Linz presented insights into their research on musical expressivity and showed their latest experiment on how machines can communicate and play live music together with humans. With support from Yamaha, we featured a computer grand piano. The Con Espressione! program was shown, and visitors could try it out.

Get in touch with us and share your experiences from your own events. We are happy to help you prepare your event and to announce it via our networks!

External links

- Con Espressione research project (EN)

- Talk “Computer Accompanist: Breaking the Wall to Computational Expressivity in Music” by Gerhard Widmer at Falling Walls Berlin (EN)

- Gerhard Widmer on Expressive Music Performance, the Boesendorfer CEUS, and a MIDI Theremin (DE, English subtitles)

- Research project Whither Music? Exploring Musical Possibilities via Machine Simulation (EN)

Credits

The research behind the Con Espressione! software has been funded by the European Research Council (ERC) under the European Union's Horizon 2020 research and innovation programme, grant agreements No 670035 ("Con Espressione") and No 101019375 ("Whither Music?").

Authors of Con Espressione!: Gerhard Widmer, Stefan Balke, Carlos Eduardo Cancino Chacón and Florian Henkel / Johannes-Kepler-Universität Linz, Institute of Computational Perception, Austria.

In collaboration with Christian Stussak, Eric Londaits, and Andreas Matt (IMAGINARY).